DeepSeek is a family of open-source LLMs developed by the Chinese company DeepSeek AI. These models are designed for tasks like text generation, summarization, and question answering. Popular variants include:

- deepseek-llm-7b-chat: A 7-billion-parameter model optimized for conversational tasks.

- deepseek-math-7b: Fine-tuned for mathematical reasoning.

- Smaller models such as deepseek-coder-1.3b-instruct for low-resource environments.

Why Run DeepSeek Locally?

- Privacy: Process sensitive data without relying on cloud APIs.

- Offline Access: Use the model without an internet connection.

- Customization: Fine-tune the model for specific tasks.

- Cost Savings: Avoid API fees for frequent usage.

Hardware Requirements

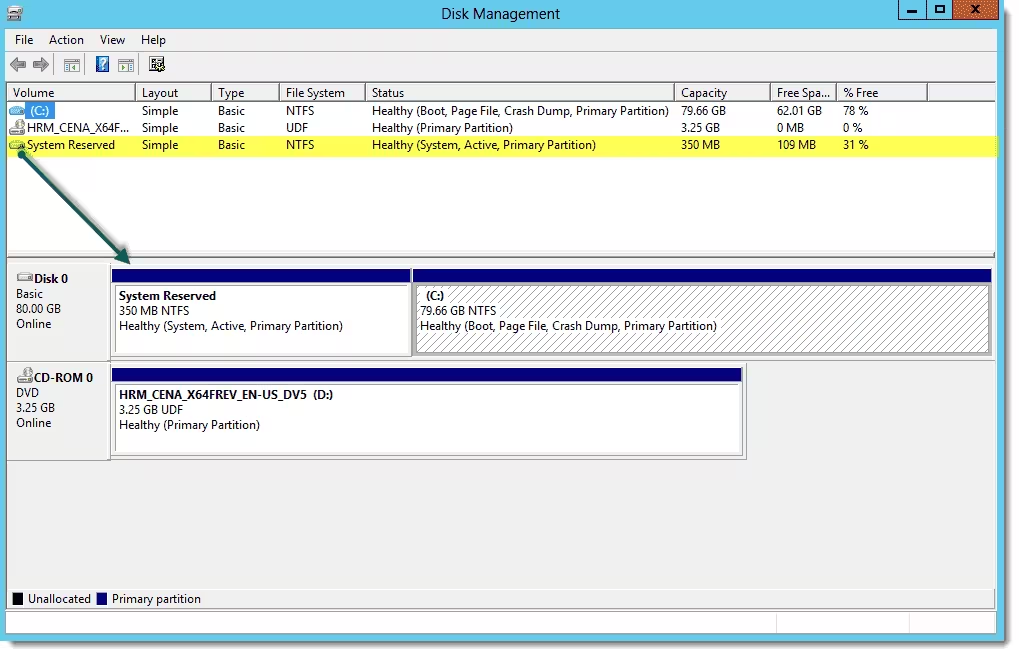

| Component | Minimum (7B Model) | Recommended (7B Model) |
|---|---|---|
| RAM | 16 GB | 32 GB |
| VRAM (GPU) | 8 GB (with 4-bit quantization) | 16 GB (e.g., RTX 4080; fits FP16 weights) |
| Storage | 15 GB (FP16 weights) | 30 GB (room for FP32 weights or several variants) |

Notes:

- For CPU-only setups, use quantized models in GGUF format (the successor to GGML) to reduce memory usage.

- Smaller models (e.g., deepseek-coder-1.3b-instruct) require fewer resources.
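The memory figures above roughly line up with a simple rule of thumb: weight memory ≈ number of parameters × bits per parameter ÷ 8. The sketch below applies it. It is an approximation that ignores the KV cache, activations, framework overhead, and the per-block scale factors that quantized formats store, so real usage is somewhat higher; the function name is illustrative only.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    # Compare common precisions for a nominal 7-billion-parameter model
    for label, bits in [("FP32", 32), ("FP16/BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"7B @ {label}: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

For a 7B model this gives about 28 GB (FP32), 14 GB (FP16/BF16), 7 GB (8-bit), and 3.5 GB (4-bit), which is why an 8 GB GPU needs a quantized model while a 16 GB card can hold FP16 weights.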

Installation Steps

Install Python and Dependencies

- Download Python 3.10+

- Visit python.org.

- Check “Add Python to PATH” during installation.

- Verify Installation

Open PowerShell and run:

```powershell
python --version  # Should show Python 3.10 or later
pip --version     # Confirms pip is installed
```

Set Up a Virtual Environment

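- Create a Project Folder

```powershell
mkdir deepseek-project
cd deepseek-project
```

(Windows PowerShell 5.1, the version bundled with Windows 11, does not support `&&`, so run the two commands separately.)

- Create and Activate a Virtual Environment

```powershell
python -m venv venv
.\venv\Scripts\activate  # Activates the environment
```

If PowerShell refuses to run the activation script, allow local scripts for your user first with `Set-ExecutionPolicy -Scope CurrentUser -ExecutionPolicy RemoteSigned`.

Install PyTorch and Transformers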

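- Install PyTorch

- For NVIDIA GPU (CUDA 12.1):

```powershell
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
```

- For CPU-only:

```powershell
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu
```

Newer PyTorch releases are built against newer CUDA versions, so check the install selector on pytorch.org for the index URL that matches the current release and your driver.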

- Install Hugging Face Libraries

```powershell
pip install transformers accelerate huggingface_hub
```

Download the DeepSeek Model

- Create a Hugging Face Account

- Sign up at huggingface.co.

- Generate an access token under Settings > Access Tokens.

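- Log In via CLI

```powershell
huggingface-cli login
```

Paste your token when prompted. A token is only strictly required for gated or private repositories.

- Download the Model

The weights are downloaded and cached automatically the first time `from_pretrained()` loads the model in the inference script below. To fetch them ahead of time, run `huggingface-cli download deepseek-ai/deepseek-llm-7b-chat`.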
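To fetch the weights ahead of time from Python instead, `huggingface_hub` provides `snapshot_download()`. A minimal sketch; the helper name `download_model` is hypothetical, and the import is deferred so the file can be loaded even before `huggingface_hub` is installed.

```python
from pathlib import Path
from typing import Optional

REPO_ID = "deepseek-ai/deepseek-llm-7b-chat"


def download_model(repo_id: str = REPO_ID, local_dir: Optional[str] = None) -> Path:
    """Download the model files (or reuse the cached copy) and return their folder."""
    # Lazy import: huggingface_hub is only needed when the download actually runs
    from huggingface_hub import snapshot_download

    # local_dir=None keeps the files in the standard Hugging Face cache, which
    # from_pretrained() also reads, so loading the model later will not re-download
    folder = snapshot_download(repo_id=repo_id, local_dir=local_dir)
    return Path(folder)
```

Calling `download_model()` inside the activated virtual environment fills the cache. Passing a folder such as `download_model(local_dir="models/deepseek-7b-chat")` (an example path) writes a plain directory whose path can be given to `from_pretrained()` directly.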

Running the Model

Basic Inference Script

Create a file deepseek_demo.py:

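```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "deepseek-ai/deepseek-llm-7b-chat"

tokenizer = AutoTokenizer.from_pretrained(model_name)
# device_map="auto" places the weights on the GPU when one is available;
# torch_dtype="auto" keeps the dtype stored in the checkpoint instead of upcasting to FP32
model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", torch_dtype="auto")

# Chat models expect their chat template around the user's message
messages = [{"role": "user", "content": "Explain quantum computing in simple terms."}]
inputs = tokenizer.apply_chat_template(
    messages, add_generation_prompt=True, return_dict=True, return_tensors="pt"
).to(model.device)

outputs = model.generate(**inputs, max_new_tokens=200)

# Decode only the newly generated tokens, skipping the echoed prompt
prompt_len = inputs["input_ids"].shape[1]
print(tokenizer.decode(outputs[0][prompt_len:], skip_special_tokens=True))
```

Run the script:

```powershell
python deepseek_demo.py
```

Optimizing Performance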
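Before applying the optimizations below, it helps to measure generation speed so different settings can be compared. A minimal timing sketch, assuming `model` and `inputs` are the objects created in deepseek_demo.py (a Transformers causal LM and a tokenized batch already on `model.device`); the helper names are hypothetical.

```python
import time


def tokens_per_second(num_new_tokens: int, seconds: float) -> float:
    """Generation throughput; returns infinity for a zero-length timing."""
    return num_new_tokens / seconds if seconds > 0 else float("inf")


def time_generation(model, inputs, max_new_tokens: int = 200) -> float:
    """Run model.generate once and return newly generated tokens per second."""
    prompt_len = inputs["input_ids"].shape[1]
    start = time.perf_counter()
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    elapsed = time.perf_counter() - start
    # For decoder-only models the output contains the prompt followed by new tokens
    new_tokens = outputs.shape[1] - prompt_len
    return tokens_per_second(new_tokens, elapsed)
```

Running `print(time_generation(model, inputs))` once before and once after enabling quantization shows whether the change helped on your hardware. The first call also includes warm-up cost, so it may be worth timing a second run.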

Quantization (Reduce Memory Usage)

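Use the bitsandbytes library for 4-bit quantization:

```python
import torch
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

# Store the weights in 4-bit and run the computation in float16
bnb_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16)

model = AutoModelForCausalLM.from_pretrained(
    model_name,  # as defined in deepseek_demo.py
    device_map="auto",
    quantization_config=bnb_config,  # requires `bitsandbytes` and, typically, an NVIDIA GPU
)
```

Install bitsandbytes:

```powershell
pip install bitsandbytes
```

CPU Optimization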

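For GGUF quantized models:

- Install llama-cpp-python:

```powershell
pip install llama-cpp-python
```

On Windows, pip builds this package from source unless a prebuilt wheel matches your setup, so a C++ compiler (e.g., Visual Studio Build Tools) may be needed.

- Download a GGUF model from Hugging Face (e.g., TheBloke/deepseek-llm-7B-chat-GGUF).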
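Once a GGUF file is on disk, llama-cpp-python can run it on the CPU. A minimal sketch with hypothetical helper names. The `User: ... Assistant:` prompt layout is an assumption based on the deepseek-llm-7b-chat model card, so check the GGUF repository's README for the exact template; the import is deferred so the small helpers work without the package installed.

```python
import os
from typing import Optional


def build_prompt(user_message: str) -> str:
    """Single-turn prompt in the assumed "User: ... Assistant:" layout."""
    return f"User: {user_message}\n\nAssistant:"


def default_threads() -> int:
    """Logical CPU count reported by the OS, never less than 1."""
    return os.cpu_count() or 1


def run_gguf(model_path: str, user_message: str, n_threads: Optional[int] = None) -> str:
    """Generate a reply from a local GGUF file with llama-cpp-python."""
    from llama_cpp import Llama  # lazy import: only needed when generating

    llm = Llama(
        model_path=model_path,
        n_ctx=2048,  # context window in tokens
        n_threads=n_threads or default_threads(),
        verbose=False,
    )
    result = llm(
        build_prompt(user_message),
        max_tokens=200,
        stop=["User:"],  # stop before the model starts writing the next user turn
    )
    return result["choices"][0]["text"].strip()
```

For example, `run_gguf("deepseek-llm-7b-chat.Q4_K_M.gguf", "Explain quantum computing in simple terms.")`, where the file name is only an example; pick a file from the repository's file list. Setting `n_threads` to the number of physical cores is often faster than using every logical CPU.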

Alternative Methods

Using Ollama

Ollama's library includes DeepSeek models (for example, deepseek-llm):

- Install Ollama for Windows.

- Run:

```powershell
ollama run deepseek-llm:7b
```

LM Studio

A user-friendly GUI for running local LLMs:

- Download LM Studio.

- Search for “DeepSeek” in the app and download the model.
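While Ollama is running, it also serves a local HTTP API (by default on port 11434), so a model pulled with `ollama run` can be called from Python. A minimal standard-library sketch; the model tag `deepseek-llm:7b` is an assumption, so use whichever tag you pulled, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(model: str, prompt: str) -> bytes:
    """JSON body for a single, non-streaming generation request."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ollama_generate(prompt: str, model: str = "deepseek-llm:7b", url: str = OLLAMA_URL) -> str:
    """Send one prompt to a running Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generation on CPU can be slow, so allow a generous timeout
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return body["response"]
```

With `"stream": False`, Ollama returns one JSON object whose `response` field holds the full answer; leaving streaming on would instead return one JSON object per line.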

Troubleshooting

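| Issue | Solution |
|---|---|
| CUDA Out of Memory | Use a smaller model or enable 4-bit quantization. |
| Slow CPU Inference | Use a GGUF model with llama-cpp-python and set `n_threads` to match your CPU cores. |
| Model Not Loading | Check the model ID spelling, free disk space, and (for gated repositories) your Hugging Face token permissions. |
| Dependency Errors | Upgrade the packages inside the virtual environment, e.g. `pip install --upgrade transformers accelerate`. |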
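To avoid out-of-memory errors in the first place, the loading strategy can be chosen from the GPU's total memory. A rough sketch; the thresholds are assumptions derived from about 14 GB of FP16 weights and about 3.5 to 4 GB of 4-bit weights for a 7B model, plus headroom, and the function names are hypothetical.

```python
def choose_setup(vram_gb: float) -> str:
    """Suggest a loading strategy for a 7B model given total GPU memory in GB."""
    if vram_gb >= 16:
        return "fp16"      # full half-precision weights should fit
    if vram_gb >= 6:
        return "4bit"      # load with bitsandbytes 4-bit quantization
    return "cpu-gguf"      # fall back to a quantized GGUF model on the CPU


def detect_vram_gb() -> float:
    """Total memory of GPU 0 in GB, or 0.0 when no CUDA device is visible."""
    import torch  # lazy import: only needed for detection

    if not torch.cuda.is_available():
        return 0.0
    return torch.cuda.get_device_properties(0).total_memory / 1e9
```

`choose_setup(detect_vram_gb())` gives a starting point only; long prompts and large `max_new_tokens` values grow the KV cache and may still need a lower setting.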

FAQs

Can I use DeepSeek commercially?

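Check the model's license on Hugging Face before any commercial use. The DeepSeek LLM models, for example, are released under the DeepSeek License, which the model card states supports commercial use subject to the license's use restrictions.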

How does DeepSeek compare to Llama 3?

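DeepSeek offers specialized variants for coding (DeepSeek-Coder) and mathematics (DeepSeek-Math) alongside general chat models, while Llama 3 is a general-purpose family. Compare the benchmarks on each model card for your specific task.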

Is a GPU mandatory?

No, but CPU inference will be significantly slower.

How do I fine-tune DeepSeek?

Use Hugging Face’s Trainer class with a custom dataset.
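A minimal sketch of that approach, assuming a hypothetical JSON Lines file `train.jsonl` with one `"text"` field per line. Full fine-tuning of a 7B model needs far more memory than a single consumer GPU provides, so treat this as a template; on consumer hardware a parameter-efficient method such as LoRA (via the peft library) is the usual choice. Imports are deferred into the function so the file loads without `datasets` or `transformers` installed.

```python
def fine_tune(data_file: str = "train.jsonl", output_dir: str = "deepseek-finetuned") -> None:
    """Causal-LM fine-tuning with the Hugging Face Trainer (template, not tuned)."""
    from datasets import load_dataset
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        DataCollatorForLanguageModeling,
        Trainer,
        TrainingArguments,
    )

    model_name = "deepseek-ai/deepseek-llm-7b-chat"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token  # the collator needs a pad token
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto")

    # Tokenize the "text" column and drop the raw columns afterwards
    dataset = load_dataset("json", data_files=data_file)["train"]
    tokenized = dataset.map(
        lambda batch: tokenizer(batch["text"], truncation=True, max_length=512),
        batched=True,
        remove_columns=dataset.column_names,
    )

    trainer = Trainer(
        model=model,
        args=TrainingArguments(
            output_dir=output_dir,
            per_device_train_batch_size=1,
            gradient_accumulation_steps=8,  # effective batch size of 8
            num_train_epochs=1,
            logging_steps=10,
        ),
        train_dataset=tokenized,
        # mlm=False: labels are the input ids, i.e. next-token prediction
        data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
    )
    trainer.train()
    trainer.save_model(output_dir)
```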

Conclusion

Running DeepSeek locally on Windows 11 is straightforward with tools like Hugging Face Transformers. For best performance, use a GPU with quantization or opt for smaller models. Explore alternative tools like LM Studio for a no-code experience.

Next Steps:

- Experiment with fine-tuning using custom datasets.

- Join the DeepSeek community for updates.

- Explore quantized models for resource-constrained setups.