The cut command in Linux is a text processing utility designed to extract specific sections (columns) from each line of files or input streams. It allows users to “cut out” selected portions of text based on byte position, character position, or field position (when using a delimiter).

Contents

- Extract Fields by Delimiter (e.g., CSV)

- Extract a Single Column from a Space-Delimited File

- Extract Characters by Position

- Extract Bytes from a Binary/Text File

- Split /etc/passwd by Colon (:)

- Use cut with Command Output (e.g., ls -l)

- Suppress Errors for Missing Delimiters

- Invert Selection (Exclude Fields)

- Extract Fixed-Width Fields (No Delimiter)

- Combine with grep and sort

- Advanced Examples

This makes it particularly useful for working with structured text files like CSV files, logs with consistent formatting, or any text with predictable patterns.

Extract Fields by Delimiter (e.g., CSV)

cut -d ',' -f 1,3 data.csv

- -d ',': Uses a comma as the delimiter.
- -f 1,3: Selects the first and third fields (columns).
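As a minimal sketch of the command above, the snippet below builds a small CSV file and extracts two columns from it. The file contents and the variable names (tmp, csv_out) are made up for illustration.

```shell
# Hypothetical sample data written to a temporary file.
tmp=$(mktemp)
printf '%s\n' 'id,name,city' '1,Alice,Paris' '2,Bob,Berlin' > "$tmp"

# Select fields 1 and 3; cut keeps the delimiter between the selected fields.
csv_out=$(cut -d ',' -f 1,3 "$tmp")
printf '%s\n' "$csv_out"
# id,city
# 1,Paris
# 2,Berlin

rm -f "$tmp"
```

Note that cut always prints fields in their original order, so -f 3,1 produces the same output as -f 1,3.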

Extract a Single Column from a Space-Delimited File

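cut -d ' ' -f 2 logfile.txt

- Splits each line on single spaces and extracts the second field. Consecutive spaces produce empty fields, so this works best when fields are separated by exactly one space.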

Extract Characters by Position

cut -c 1-5 names.txt

- -c 1-5: Displays the first 5 characters of each line.

Extract Bytes from a Binary/Text File

cut -b 10-20 file.bin

- -b 10-20: Shows bytes 10 to 20 of each line (cut works line by line, not on the file as a whole).

Split /etc/passwd by Colon (:)

cut -d ':' -f 1,6 /etc/passwd

- Extracts the username (1st field) and home directory (6th field).

Use cut with Command Output (e.g., ls -l)

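ls -l | tr -s ' ' | cut -d ' ' -f 5-

- Extracts the file size and everything after it (modification date and name) from ls -l (fields 5 and onward).
- ls -l pads its columns with runs of spaces, and cut treats every single space as a delimiter, so tr -s ' ' is used first to squeeze each run into one space.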
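To see why padded columns matter, here is a small sketch that runs cut on one made-up line in the style of ls -l output. The line contents and the variable names (line, naive, squeezed) are assumptions for illustration; real ls -l output varies between systems.

```shell
# Hypothetical ls -l style line with padded (multi-space) columns.
line='-rw-r--r--  1 alice staff   4096 Jan  5 10:30 notes.txt'

# Every single space is a delimiter, so the padding creates empty fields
# and field 5 is not the size.
naive=$(printf '%s\n' "$line" | cut -d ' ' -f 5)
printf '%s\n' "$naive"
# staff

# Squeezing runs of spaces with tr -s first makes the field numbers line up.
squeezed=$(printf '%s\n' "$line" | tr -s ' ' | cut -d ' ' -f 5)
printf '%s\n' "$squeezed"
# 4096
```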

Suppress Errors for Missing Delimiters

cut -s -d ',' -f 2 file.txt

- -s: Skips lines that do not contain the delimiter. Without -s, cut does not report an error for such lines; it prints them unchanged.
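The difference -s makes can be sketched with three made-up input lines, one of which has no comma. The sample data and variable names (data, without_s, with_s) are illustrative only.

```shell
# Hypothetical input: the middle line contains no delimiter.
data=$(printf '%s\n' 'a,b,c' 'no delimiter here' 'x,y,z')

# Without -s, the line that lacks a comma is passed through whole.
without_s=$(printf '%s\n' "$data" | cut -d ',' -f 2)
printf '%s\n' "$without_s"
# b
# no delimiter here
# y

# With -s, that line is dropped from the output.
with_s=$(printf '%s\n' "$data" | cut -s -d ',' -f 2)
printf '%s\n' "$with_s"
# b
# y
```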

Invert Selection (Exclude Fields)

cut --complement -d ',' -f 2 data.csv

- Excludes the second field and displays all others.
- --complement is a GNU extension and may not be available in other implementations of cut.
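Where --complement is not available, the same result can usually be obtained by listing the fields to keep. The sketch below uses a made-up row and hypothetical variable names (row, portable, gnu), and guards the GNU-only call so it is skipped on systems that lack the option.

```shell
# Hypothetical CSV row.
row='1,Alice,Paris,FR'

# Portable: keep field 1, then field 3 through the end of the line.
portable=$(printf '%s\n' "$row" | cut -d ',' -f 1,3-)
printf '%s\n' "$portable"
# 1,Paris,FR

# GNU cut only: invert the selection instead. The if-guard keeps the
# script running when the option is not supported.
if gnu=$(printf '%s\n' "$row" | cut --complement -d ',' -f 2 2>/dev/null); then
  printf '%s\n' "$gnu"
fi
```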

Extract Fixed-Width Fields (No Delimiter)

cut -c 1-10,20-30 fixed_width.txt

- Selects characters 1–10 and 20–30 from each line.
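A short sketch of how the two ranges are applied, using a made-up line in which digits, lowercase letters, and uppercase letters mark the column positions. The variable names (line, fixed) are illustrative.

```shell
# Hypothetical line: chars 1-10 are digits, 11-19 lowercase, 20-30 uppercase.
line='0123456789abcdefghiABCDEFGHIJKxyz'

# The selected ranges are printed back to back, with no separator added.
fixed=$(printf '%s\n' "$line" | cut -c 1-10,20-30)
printf '%s\n' "$fixed"
# 0123456789ABCDEFGHIJK
```

Because nothing is inserted between the ranges, adjacent columns can run together in the output when the source columns are not padded.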

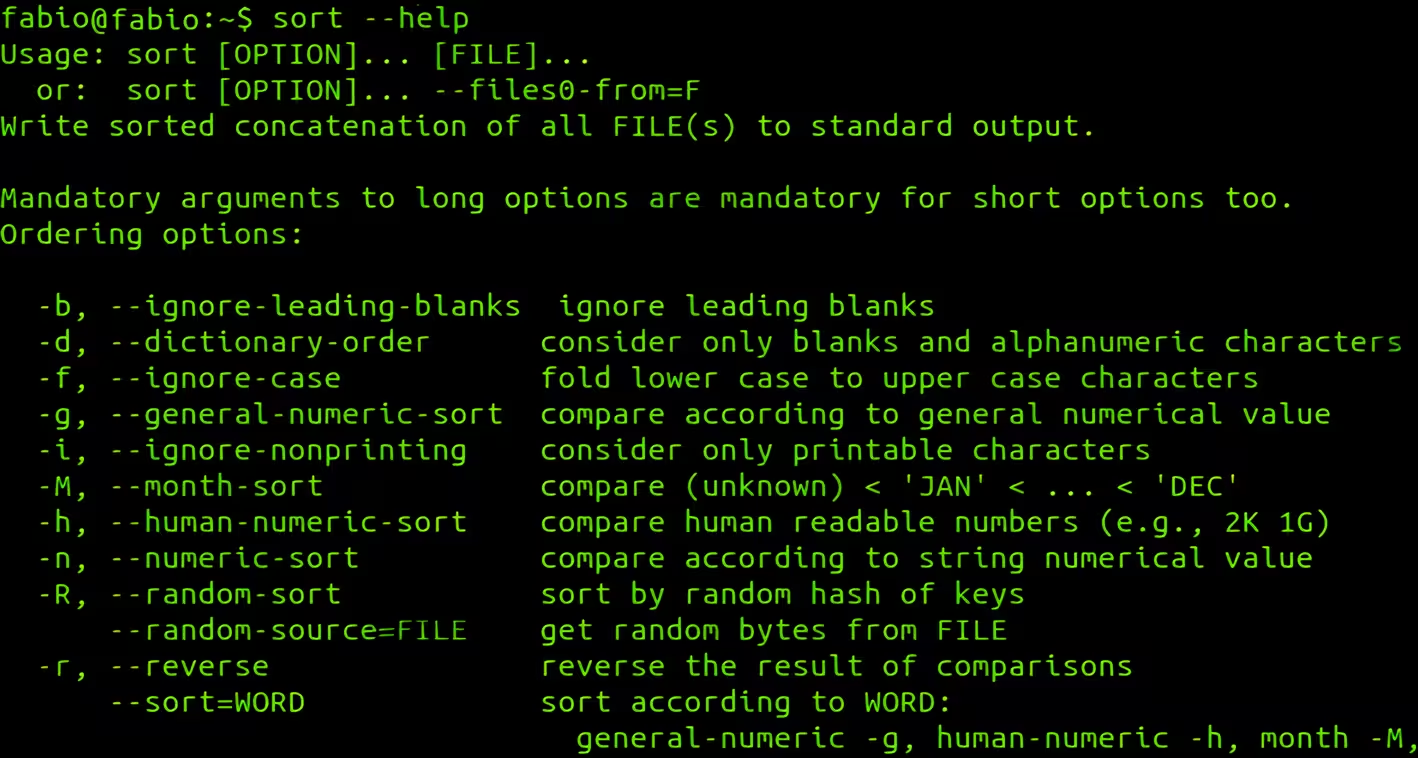

Combine with grep and sort

grep "ERROR" app.log | cut -d ' ' -f 3 | sort -u

- Extracts the third field from error lines and sorts unique values.
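The pipeline can be tried on a small made-up log. The log format (date, level, component, message, separated by single spaces) and the variable names (log, components) are assumptions for illustration.

```shell
# Hypothetical log file: date, level, component, then a message.
log=$(mktemp)
printf '%s\n' \
  '2024-01-01 ERROR db connection lost' \
  '2024-01-01 INFO api request ok' \
  '2024-01-02 ERROR api timeout' \
  '2024-01-03 ERROR db deadlock' > "$log"

# Keep error lines, take the component (field 3), then deduplicate.
components=$(grep "ERROR" "$log" | cut -d ' ' -f 3 | sort -u)
printf '%s\n' "$components"
# api
# db

rm -f "$log"
```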

Advanced Examples

Get a list of all shell users (with real shells)

grep -v "nologin\|false" /etc/passwd | cut -d: -f1,7

Extract columns from fixed-width data

cut -c1-10,15-25,30-40 fixed_width_data.txt

Find the top 5 largest directories (by item count)

find . -type d | while IFS= read -r dir; do echo "$(ls -1 "$dir" | wc -l) $dir"; done | sort -rn | head -5 | cut -d' ' -f2-

Parse and extract specific fields from JSON (with helpers)

jq -r '.users[] | [.name, .email] | @csv' data.json | cut -d, -f1

- jq's @csv wraps string values in double quotes, so the extracted name keeps its quotes, and a name that itself contains a comma is split at that comma.

Key Notes:

- Delimiters: Use -d to set the field delimiter (e.g., -d ',' for CSV). The default delimiter is a tab.

- Field vs. Character Mode: -f selects fields, -c selects characters.

- Limitations: cut cannot handle multi-character delimiters (use awk for complex parsing).
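As a rough sketch of the awk alternative for multi-character delimiters, the snippet below splits a made-up record on "::". The record and the variable names (record, home) are hypothetical.

```shell
# Hypothetical record using a two-character delimiter.
record='alice::/home/alice::/bin/bash'

# awk accepts a multi-character field separator via -F.
home=$(printf '%s\n' "$record" | awk -F '::' '{ print $2 }')
printf '%s\n' "$home"
# /home/alice
```

awk treats a multi-character separator as a regular expression, so characters with special meaning in regular expressions need to be escaped.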